Twitter is broadening access to a feature called Safety Mode, designed to give users a set of tools to defend themselves against the toxicity and abuse that are still far too often a problem on its platform. First introduced to a small group of testers last September, Safety Mode launches into beta today for more users across English-speaking markets, including the U.S., U.K., Canada, Australia, Ireland and New Zealand.

The company says the expanded access will allow it to collect more insights into how well Safety Mode works and learn what sort of improvements still need to be made. Alongside the rollout, Safety Mode will also prompt users when they may need to enable it, Twitter notes.

As a public social platform, Twitter faces a continual struggle with conversation health. Over the years, it’s rolled out a number of tweaks and updates in an attempt to address this issue — including features that would automatically hide unpleasant and insulting replies behind an extra click; allow users to limit who could reply to their tweets; let users hide themselves from search; and warn users about conversations that are starting to go off the rails, among other things.

But Safety Mode is more of a defensive tool than one designed to proactively nudge conversations in the right direction.

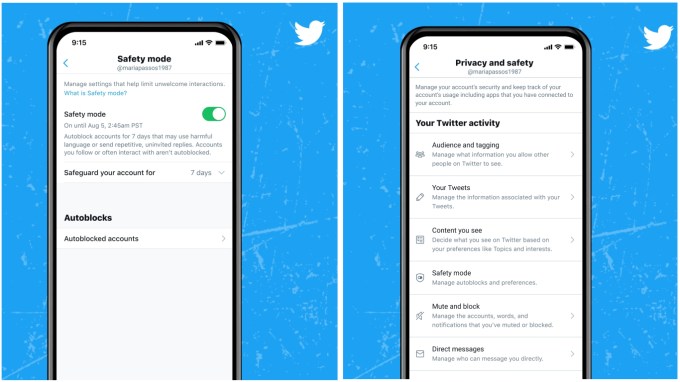

It works by automatically blocking accounts for seven days which are replying to the original poster with harmful language or sending uninvited, repetitive replies — like insults and hateful remarks or mentions. During the time that Safety Mode is enabled, those blocked accounts will be unable to follow the original poster’s Twitter account, see their tweets and replies or send them Direct Messages.

Image Credits: Twitter

Twitter’s algorithms determine which accounts to temporarily block by assessing the language used in the replies and examining the relationship between the tweet’s author and those replying. If the poster follows the replier or interacts with the replier frequently, for example, the account won’t be blocked.
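Twitter has not published how these checks are implemented, so the following is only a rough Python sketch of the decision as described above. The `harm_score` field, both thresholds and every class and function name are hypothetical stand-ins rather than anything from Twitter's systems, and repetitive-reply detection is left out entirely.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

BLOCK_DURATION = timedelta(days=7)   # the seven-day autoblock described in the article
HARM_THRESHOLD = 0.8                 # hypothetical cutoff for "harmful language"
FREQUENT_INTERACTIONS = 5            # hypothetical cutoff for "interacts frequently"


@dataclass
class Author:
    """The original poster who has Safety Mode enabled (hypothetical model)."""
    following: set = field(default_factory=set)
    interaction_counts: dict = field(default_factory=dict)


@dataclass
class Reply:
    """A reply to the author, with an assumed 0..1 score from a language classifier."""
    replier: str
    harm_score: float


def should_autoblock(author: Author, reply: Reply) -> bool:
    """Return True if the replier would be temporarily blocked in this sketch."""
    # Relationship check: accounts the author follows are never autoblocked.
    if reply.replier in author.following:
        return False
    # Relationship check: accounts the author interacts with often are exempt too.
    if author.interaction_counts.get(reply.replier, 0) >= FREQUENT_INTERACTIONS:
        return False
    # Language check: only replies scored as harmful trigger a block.
    return reply.harm_score >= HARM_THRESHOLD


def block_expiry(blocked_at: datetime) -> datetime:
    """Return when a temporary block placed at `blocked_at` would lapse."""
    return blocked_at + BLOCK_DURATION
```

In this sketch the relationship checks run first, so a followed or frequently contacted account is exempt no matter how its reply is scored, which mirrors the exemption the article describes.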

The idea with Safety Mode is to give users under attack a way to quickly put up a defensive system without having to manually block each account that’s harassing them — a near impossibility when a tweet goes viral, exposing the poster to elevated levels of abuse. This situation is one that happens not only to celebs and public figures whose “cancelations” make the headlines, but also to female journalists, members of marginalized communities and even everyday people, at times.

It’s also not a problem unique to Twitter: Instagram launched a similar set of anti-abuse features last year, after several England footballers were viciously harassed by angry fans following the team’s defeat in the Euro 2020 final.

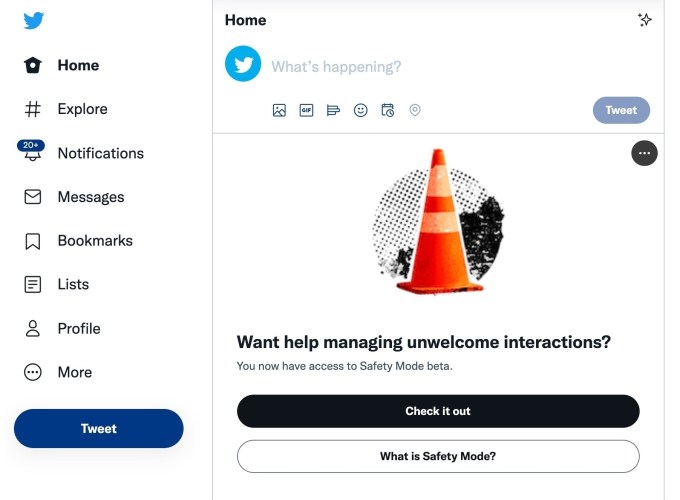

Based on early testers’ feedback, Twitter learned people want more help identifying when an attack may be getting underway, it says. As a result, the company today says the feature will also now prompt users to enable it when the system detects potentially harmful or uninvited replies. These prompts can appear in the user’s Home Timeline or as a device notification if the user is not currently on Twitter. This should help the user from having to dig around in Twitter’s Settings to locate the feature.

Image Credits: Twitter

Safety Mode was previously tested by 750 users during the early trials. Twitter will now roll out the beta to around 50% of users (randomly selected) in the supported markets. The company says it’s exploring how those users may give it feedback directly in the app.

Twitter has not shared when it plans to make Safety Mode publicly available to its global users.

from TechCrunch https://ift.tt/NqJuE5j

